Temporal Dithering Sensitivity - My Solution

AlanSmith I'm curious where that displayspecifications site is getting its information from. They list my monitor (LG 24GQ50F) as true 8-bit, but I can see what appears to be dithering.

I don't think it's coming from the source device, since I'm using an Intel iGPU, and those don't dither, or so I've read on here.

silentJET85 There is no Intel iGPU that does not dither, especially the newer ones.

So many people have problems with integrated graphics chips, especially Intel. Try a dedicated Nvidia GPU with your screen and set it up in the Nvidia Control Panel to use Nvidia color settings, 8-bit, Full RGB. You should then have truly zero dithering with a true 8-bit screen.

silentJET85 The LG 24GQ50F does not use PWM (Pulse Width Modulation) to regulate backlight brightness at any level. Instead, DC (Direct Current) is used to moderate brightness. The backlight is therefore considered ‘flicker-free’, which will come as welcome news to those sensitive to flickering or worried about side-effects from PWM usage. The exception to this is with ‘1ms Motion Blur Reduction’ active, a strobe backlight setting which causes the backlight to flicker in sync with the refresh rate of the display.

Read the overview on the monitor.

(1) You can disable the "flickering": select [Menu] → [Game Control] → [1ms Motion Blur Reduction] and set it to [OFF].
(2) "Response Time" can also tire the eyes. Better to turn it off.
(3) Then turn on FreeSync Premium.

What is a strobe backlight? Read from here.

AlanSmith Yeah, I bought it because the reviews said it was flicker-free. But it still makes me dizzy, and I see subtle flicker/snow.

LED light in general bothers me, so I ended up taking the monitor apart and removing the LED backlight. Now I use sunlight or incandescent lamps to light the screen. It's an improvement over the LED, but I can still see the same flicker, and it still makes me dizzy after a while, so I assume it must be dithering or inversion.

silentJET85 You're lighting it up from the back? The Eazeye monitor is basically that, but as a polished product. That's a good idea. I was going to try it as a DIY project, but I'm waiting for my Eazeye to come in instead.

jordan Yep, the Eazeye was one of my inspirations for it. I can't justify spending that much money right now, so I built my own.

Here's a short video I recorded of it: https://www.youtube.com/watch?v=XHJx7D-ttdM&list=PLjWOpadBWIjVwmUxnnkjyZOP9XJYOBAum

silentJET85 Yeah, I get that. I'm so desperate for a good screen that I figured, why not. I left a comment on your vid.

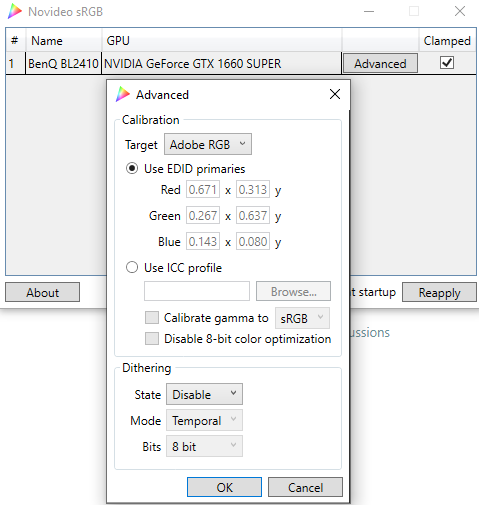

Hello, has anyone tested the novideo_srgb app to disable dithering?

AlanSmith Please don't mislead people. The website you cited has nothing in common with official or real specifications. It contains a lot of false information, and nobody should trust it. Here, for example, is another link where you can check the answer from an official Dell representative.

There is also another link that I can offer for your attention.

Let's check the information on Google and do some fact-checking before posting it here.

Dell E2715H (FullHD) IPS 6bit+FRC

Dell U2715H (QuadHD) IPS 8bit

These are two different monitor models. Check which model you have (see the sticker on the back of the monitor).

In 2015 I sold my Dell U2711 (CCFL, 140 W). A very hot monitor! But there was no eye fatigue.

silentJET85 Your monitor is not 8-bit at all; displayspecifications is wrong. If you check your panel **[M238HVN02.0](https://www.panelook.com/M238HVN02.0_AUO_23.8_LCM_overview_52037.html "AUO M238HVN02.0")** on Panelook, it's listed as Display Colors: 16.7M (6-bit + Hi-FRC), which means bad dithering, and it's unlikely you can get rid of it completely.
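
For anyone wondering what "6-bit + FRC" means in practice, here is a rough Python sketch of the general idea. It is only an illustration: the function names (`frc_frames`, `perceived_8bit`) and the simple 4-frame pattern are my own assumptions, and real panels use their own (usually undocumented) spatial and temporal patterns. The point is just that a 6-bit panel has to alternate between two neighbouring levels over time to fake an in-between 8-bit level, and that alternation is the flicker some of us notice.

```python
# Illustrative only: a toy model of temporal dithering (FRC).
# The 4-frame pattern is an assumption, not any vendor's actual algorithm.

NATIVE_6BIT_COLORS = (2 ** 6) ** 3   # 262,144 colors without FRC
ADVERTISED_COLORS = (2 ** 8) ** 3    # 16,777,216 colors, the "16.7M" figure


def frc_frames(value_8bit, n_frames=4):
    """Return the 6-bit levels shown on successive frames for one 8-bit value."""
    # Dropping 2 bits: base is the 6-bit level, remainder is what got lost.
    base, remainder = divmod(value_8bit, 4)
    frames = []
    for i in range(n_frames):
        # Show the next-higher level on `remainder` out of every 4 frames.
        level = base + 1 if i % 4 < remainder else base
        frames.append(min(level, 63))  # a 6-bit panel tops out at level 63
    return frames


def perceived_8bit(frames):
    """Time-averaged brightness, scaled back to the 8-bit range."""
    return sum(frames) * 4 / len(frames)


if __name__ == "__main__":
    for value in (128, 129, 130, 131):
        frames = frc_frames(value)
        print(value, frames, perceived_8bit(frames))
```

In this toy model, an 8-bit value that is a multiple of 4 (like 128) is shown as a steady 6-bit level, while everything in between (129, 130, 131) makes the pixel toggle between two levels from frame to frame. Values near the very top of the range get clamped here; how real panels handle that edge is not something I can confirm.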

LG 27UP850N-W - best monitor for eye care!

I can work with it all day long, even with new Macs with Apple chips (connected via USB Type-C).

VSABALAIEV78 That uses FRC (8-bit + 2-bit FRC), so it's going to dither. Best to leave HDR disabled so it stays at 8-bit.

JTL How did you manage to disable dithering?

gregtsakil9 Attempting to visualize dithering with zoomed-in camera shots is the wrong approach.

If I remember right, this was accomplished with nvidia-xconfig.

JTL Can we change that setting in Windows?

gregtsakil9 Don't know about Windows, I'm afraid.